I wrote a post last year on how to implement rotational backups; it worked well until I moved my backups to a VPS.

The problem was that in my previous implementation, other users rsynced their backups through their own SSH logins, which let me secure the server account by account: if someone compromised their SSH key, they wouldn’t be able to access any of my data on the backup server.

In the VPS environment, you only get one SSH login per account. I could have kept the same process, but it would have meant giving other people root login access, which I wasn’t happy with.

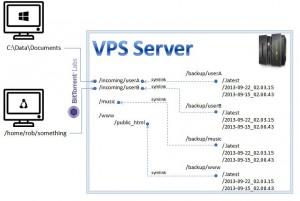

To get around the problem, I changed the process so that the rotation and the rsync delta were done by cron jobs on the VPS, while data was moved from the source machine to the VPS using BitTorrent Sync, so I didn’t have to give out any keys.

The same script also backs up folders mastered on the VPS, like my music library or website.

- A “live” folder was created on the VPS to sync with the client through BitTorrent Sync

- The source folder on the client was set to read-only, providing a one-way push to the VPS

- A new backup folder was created for each folder being synced

- A `.latest` symlink was created inside each backup folder, pointing at the folder being synced

- I wrote a script to rotate the backup on a cron schedule, which:

    - Hard links (`cp -al`) the most recent backup folder into a new folder named with the current time

    - Rsyncs the `.latest` folder onto the new folder (the delta)

    - Removes the oldest backup if we’ve exceeded the number of backups to maintain
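A minimal sketch of the one-off setup for a single synced folder, assuming the BitTorrent Sync “live” folder lives on the VPS. The function name `setup_backup` and any paths passed to it are hypothetical; only the `.latest` symlink convention comes from the steps above.

```bash
#!/bin/bash
# Hypothetical helper: one-off setup for a single synced folder.
# setup_backup LIVE_DIR BACKUP_DIR
#   LIVE_DIR   - the folder kept up to date by BitTorrent Sync
#   BACKUP_DIR - the folder that will hold the dated snapshots
setup_backup() {
  local live="$1" backups="$2"
  mkdir -p "$live" "$backups" || return 1
  # .latest is a symlink from the backup folder to the folder being synced.
  # -n stops ln from descending into an existing .latest link and -f replaces
  # it, so re-running the setup is safe.
  ln -sfn "$live" "$backups/.latest" || return 1
  # Print the resolved target so the link can be checked by eye
  readlink -f "$backups/.latest"
}
```

For example, `setup_backup /srv/btsync/music /srv/backups/music` (hypothetical paths) would leave `/srv/backups/music/.latest` pointing at the live folder, and the rotation script would then be run against `/srv/backups/music`.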

The script takes two parameters:

- path: the root location of the backups (the parent folder of .latest)

- numRotations: the maximum number of backups to keep before old ones are deleted; defaults to 16

```bash
#!/bin/bash
# rotate_backup path [numRotations]
# Takes a hard-linked snapshot of path/.latest and prunes the oldest snapshots.

# Check for required parameters and prerequisites
if [ -z "$1" ]; then
  echo "Missing parameter 1: usage rotate_backup path [numRotations]" >&2
  echo " - path: the path to a folder that should be rotated" >&2
  echo " - numRotations: optional; how many rotations should be maintained (defaults to 16)" >&2
  exit 1
fi

basefolder=$(readlink -mn "$1")

# Check the .latest folder exists as either a directory or a symlink
if [[ ! -d "$basefolder/.latest" && ! -L "$basefolder/.latest" ]]; then
  echo "Missing a .latest directory within \"$basefolder\". You can either:" >&2
  echo " - symlink to the data to be backed up: ln -s /path/to/data \"$basefolder/.latest\"" >&2
  echo " - create the directory and populate it with the data: mkdir \"$basefolder/.latest\"" >&2
  exit 1
fi
latestfolder=$(readlink -mn "$basefolder/.latest")

# Check for optional parameters, setting defaults if unset
rotations=${2:-16}

# List snapshot folders (everything except .latest), oldest first.
# Folder names are timestamps, so sorting by name sorts by date.
list_snapshots() {
  find "$basefolder" -mindepth 1 -maxdepth 1 -type d ! -name ".latest" | sort
}

# Get the latest snapshot
lastSnapshot=$(list_snapshots | tail -n 1)

# Create a folder for this snapshot
newfolder=$(readlink -mn "$basefolder/$(date +%Y-%m-%d_%H.%M.%S)")
mkdir -p "$newfolder"

# Copy as hard links from the previous snapshot (or from .latest on the first run)
if [ -n "$lastSnapshot" ]; then
  cp -al "$lastSnapshot/." "$newfolder/" >/dev/null 2>&1
else
  cp -al "$latestfolder/." "$newfolder/" >/dev/null 2>&1
fi

# Make the changes on top of the hard links using rsync
rsync -aLk --delete "$latestfolder/" "$newfolder/" >/dev/null 2>&1

# Remove the oldest folders while there are more than the maximum rotations
while [ "$rotations" -lt "$(list_snapshots | wc -l)" ]; do
  oldestfolder=$(list_snapshots | head -n 1)
  if ! rm -Rf "$oldestfolder" >/dev/null 2>&1; then
    echo "Failed to remove old rotation \"$oldestfolder\"" >&2
    exit 1
  fi
done

echo "$latestfolder"
```

I’ve been running the backup for a few weeks and not encountered any problems.

I am not sure I understand the setup.

1. Doesn’t the above mean you have two full copies of each file: one in the “live” folder synced by bt-sync and a second used as the base for the rsync?

2. How do you know when to run the script (so that you wouldn’t be in the middle of a bt-sync session)?

Hey Assaf,

1. That depends on what you mean by a “full copy” of the file. In Linux terms there will be multiple references (hard links) to the same inode, but only one copy of the file’s contents on disk. 50 snapshots of an unchanged file will “look” like 50 versions of the file, but the file is only stored once on disk.

I wanted rotational backups, so across 10 weeks, if a file hadn’t changed, it would be “stored” once but present in each full backup (in the same state). If the file changed in week 5, the first 5 backups would refer to revision 1 and the next 5 would refer to revision 2. There would be only 2 files on disk, one for each version.

This is why the script does a cp -al (to create hard links) and an rsync over the top to update any changed files on that snapshot.

2. As it’s only for home backups, I run them in the early hours of the morning to minimise changes during the backup, but you’re right: there’s nothing to stop the backup capturing a BTSync `.!sync` file in the snapshot. It was a compromise I was happy to make, given I only have access to a single OS user and don’t want to share root access.
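One way to narrow that window is to skip a run while a transfer looks unfinished. The sketch below is hypothetical (the `sync_in_progress` name is made up) and assumes BitTorrent Sync marks in-progress downloads with a `.!sync` suffix. It can’t detect a file being modified in place, so it reduces the risk rather than removing it.

```bash
#!/bin/bash
# Hypothetical guard to run before the rotation script.
# Assumption: BitTorrent Sync names partially transferred files "*.!sync".
# sync_in_progress DIR -> exit status 0 if any *.!sync file exists under DIR
sync_in_progress() {
  # -L follows the .latest symlink; -print -quit stops at the first match
  [ -n "$(find -L "$1" -name '*.!sync' -print -quit 2>/dev/null)" ]
}
```

A cron job could then call something like `sync_in_progress "$dir/.latest" || rotate_backup "$dir"` (with `$dir` a hypothetical backup folder), so a busy night simply produces no snapshot and the next scheduled run catches up.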

Hope that makes sense.

Hi, thanks for the quick reply.

I am not sure I understand: when you do cp -al you are creating the hard links for the rsync directories, but there will be another copy in the source directory.

So let’s say we have a client with a big file.

The file is synced to the source dir on the server and then copied in the first rsync. True, we do not pay for additional rotations, but we have 2 copies of it on the server.

Am I understanding it correctly, or is there some way to hard link to the original file in the “source” directory and unlink it when it changes?

As for capturing the sync file: I was not much worried about capturing the sync file itself (it would simply be backed up the next day). I understood that if the changes to a file are small, BT Sync patches the file. I was worried about catching the file in the middle of being patched (i.e. getting a corrupted version of the file).

I am simply looking for a similar setup for my home (basically backing up between my computer and my parent’s computer and vice versa).

Thanks,

Assaf.

If you check out the diagram I put in the post, it might help when you read this…

The “Source” directory is maintained by BTSync, so that’s where the master is. I symlink (`ln -s`) the source directory to the .latest directory, just to make management easier for the rotation scripts; the symlink doesn’t use up extra space. This is probably the bit you’re missing: I don’t “copy” between the synced directory and the backup directories, I soft link them (a folder pointer).

The first time I back up, I recursively hard link the contents of .latest into a folder named with the current date and time; this creates the first backup folder. Hard links share the same inode and just add another pointer to it, so no extra space is used for the file contents.

The next time I back up, I recursively hard link the most recent backup folder into a new folder named with the current time. These are hard links again, so no extra space is used, but we haven’t yet captured any changes made since the last backup (the files that differ between .latest and the previous folder).
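To see the space claim in practice, here is a throwaway sketch (assuming GNU coreutils) that makes ten hard-linked snapshots of one unchanged 1 MiB file and measures them with a single `du` call, which counts each inode only once.

```bash
#!/bin/bash
# Sketch: ten hard-linked snapshots of an unchanged 1 MiB file.
# Assumes GNU coreutils; one du invocation counts each inode only once.
set -euo pipefail
demo=$(mktemp -d)
trap 'rm -rf "$demo"' EXIT

mkdir -p "$demo/snap01"
head -c 1048576 /dev/urandom > "$demo/snap01/big.bin"   # 1 MiB of data

for i in 02 03 04 05 06 07 08 09 10; do
  mkdir -p "$demo/snap$i"
  cp -al "$demo/snap01/." "$demo/snap$i/"   # hard links only, no data copied
done

# Per-snapshot usage: snap01 carries the data, the rest add almost nothing
du -sk "$demo"/snap*
# Grand total across all ten snapshots, in KiB
total_kib=$(du -skc "$demo"/snap* | tail -n 1 | cut -f1)
echo "total: ${total_kib} KiB for 10 snapshots"
echo "names for big.bin: $(stat -c %h "$demo/snap01/big.bin")"
```

The total stays close to 1 MiB (plus a few KiB of directory entries) rather than 10 MiB.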

I then use `rsync -aLk --delete .latest/ currentFolder/`

This only copies files which have changed between .latest and the most recent backup. If a file hasn’t changed, the hard link I copied is kept. If the file has changed since the previous backup, rsync replaces the hard-linked file with a new, fresh (unlinked) copy. This is the only point in the process where a file that isn’t a hard or soft link is created, and the only place extra space is needed.

Honestly, I’m not sure about BTSync patching causing corruption… I think it’s unlikely if you run the backups “off peak”. The other option is my older post (https://weeks.uk/blog/2012/04/13/implementing-rotational-backups/) using pure rsync and SSH to do the transfer, which cuts BTSync out of the equation.

Hi,

I understand the rsync portion. My question is about the BitTorrent Sync portion.

If you link to .latest and BitTorrent Sync does the backup, wouldn’t it simply overwrite the hard-linked file, thereby destroying all previous versions?

It wouldn’t destroy all the previous versions: when the file changes, rsync snapshots that change, breaking the link and replacing the file with the changed version.

All BTSync does is make sure the .latest folder has the latest content of the backup source, as the source is in a remote location. All the snapshotting is done by rsync.

If you take BTSync out of the equation, it’s just like using rsync to back up a local source folder, and it behaves the same way.
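The question above comes down to how a hard-linked file gets updated. This throwaway sketch compares writing into the existing file with writing a new file and renaming it over the old name, which is what rsync does by default unless `--inplace` is given. How BitTorrent Sync itself writes updates isn’t covered in the post, so the sketch only shows the two cases.

```bash
#!/bin/bash
# Compares two ways of updating a file that has a hard-linked "snapshot".
set -euo pipefail
demo=$(mktemp -d)
trap 'rm -rf "$demo"' EXIT

# Case 1: overwrite in place - every hard link sees the new content
echo "old" > "$demo/live_a"
ln "$demo/live_a" "$demo/snap_a"
echo "new" > "$demo/live_a"        # truncates and rewrites the shared inode
echo "in place:      snapshot now says $(cat "$demo/snap_a")"

# Case 2: write a temp file, then rename it over the original
echo "old" > "$demo/live_b"
ln "$demo/live_b" "$demo/snap_b"
echo "new" > "$demo/live_b.tmp"
mv -f "$demo/live_b.tmp" "$demo/live_b"   # live_b now names a new inode
echo "temp + rename: snapshot still says $(cat "$demo/snap_b")"
```

Only the in-place write reaches through the hard link to the snapshot; the rename leaves the snapshot’s inode untouched.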